My projects

During my studies so far, I have been involved in many hardware and software projects, either individually or as part of a team. A selection of them is listed below.

Post-doc projects

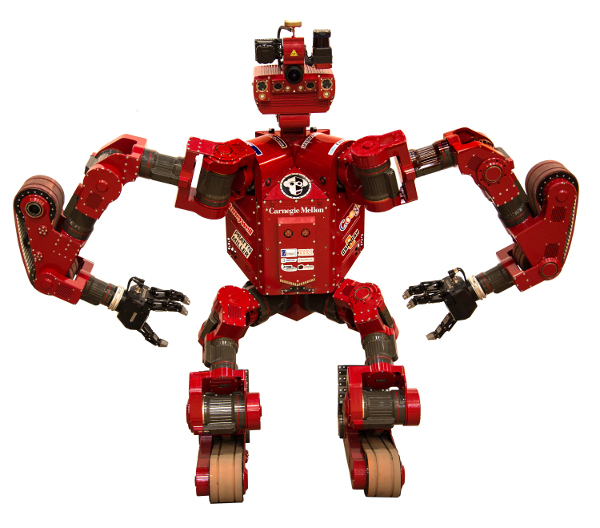

CHIMP

As a member of the Tartan Rescue Team, at the National Robotics Engineering Center at Carnegie Mellon's Robotics Institute, I have been working on the CHIMP robot as part of the efforts towards competing in the DARPA Robotics Challenge Finals. CHIMP (CMU Highly Intelligent Mobile Platform) is a human-size robot that, when standing, is 5-foot-2-inches tall and weighs about 400 pounds. It is not a dynamically balanced walking robot, but is a statically stable robot designed to move on tank-like treads affixed to each of its four limbs. When it needs to operate power tools, turn valves, or otherwise use its arms, CHIMP can stand and roll on its leg treads.

Assistive Dexterous Arm (ADA)

At the Personal Robotics Lab at Carnegie Mellon's Robotics Institute, we have been developing the Assistive Dexterous Arm (ADA) in collaboration with the Rehabilitation Institute in Chicago. ADA is built upon MICO, a joystick-controlled assistive robot arm manufactured by Kinova Robotics. We augment the arm with multi-modal perception and world-modeling capabilities, state-of-the-art motion planning algorithms developed in-house, and a novel control and decision-making framework based on sliding autonomy. The grand vision of the project is to have ADA continuously learn about the daily routines of its users, as well as their personalities and preferences, while accumulating perception, manipulation, and task execution experience.

Grad projects

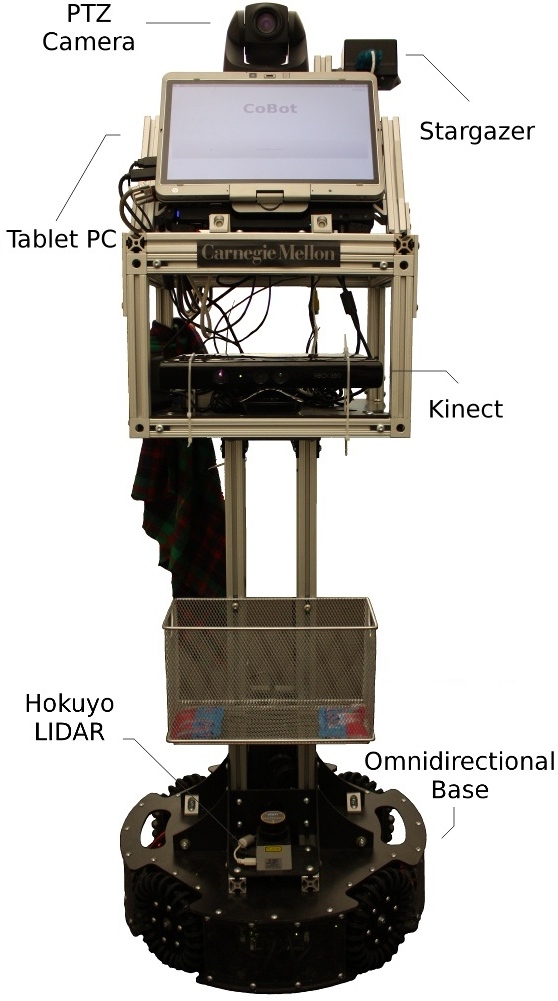

CoBot

CoBots are autonomous mobile service robots that are capable of performing service tasks, such as transporting small items, delivering messages, escorting visitors, and giving tours. As of now, they have limited capabilities (e.g. they do not have robotic manipulator arms); however, they are aware of their limitations and they use the concept of symbiotic autonomy to achieve their tasks. That is, they proactively ask humans for help when their abilities fall short, for instance, to call the elevator or to load items into their baskets. CoBot is the main mobile platform that I use in the experiments for my thesis work, where I focus on experience-based mobile push manipulation. In addition to using the CoBots for my experiments, I was also involved in their deployment during my visit to Carnegie Mellon University, logging a large amount of data and analyzing it to extract some important statistics.

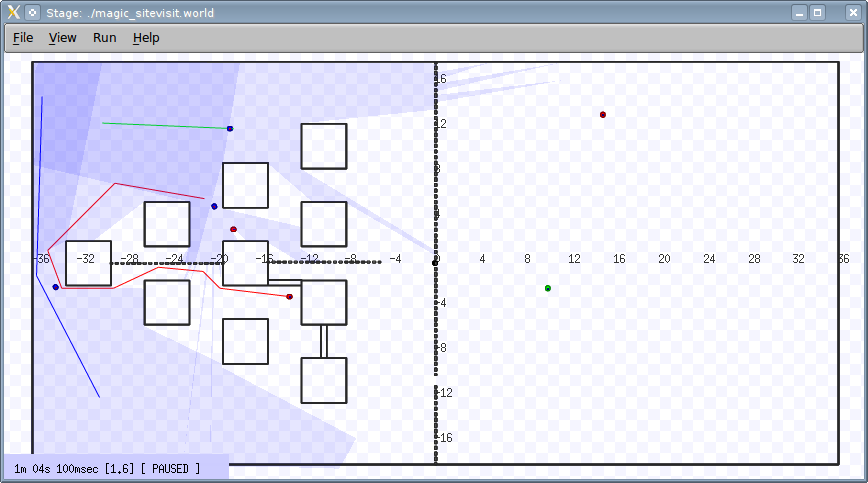

Team Cappadocia - MAGIC 2010

The main aim of the Multi Autonomous Ground-robotic International Challenge (MAGIC 2010) competition was to have teams develop and deploy squads of unmanned vehicle prototypes that autonomously coordinate, plan, and execute a series of timed tasks including classifying and responding to simulated threats and exploring/mapping diverse terrains.

I was a member of Team Cappadocia, a joint effort of ASELSAN (Turkish military electronics company), Boğaziçi University, Bilkent University, and Middle East Technical University from Turkey, and Ohio State University (Control & Intelligent Transportation Research Lab) from the United States.

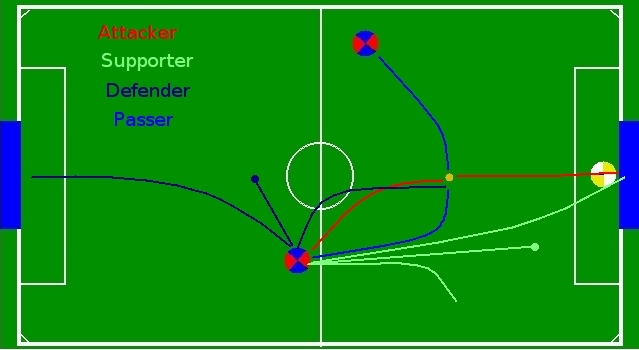

My main responsibility was the development of a dynamic mission planner that would coordinate the sensor and the disrupter robots in the team, compute the optimal paths for each robot, and update the assigned paths accordingly as the knowledge about the environment gets more complete and accurate. The overall concern of the planner was to maximize area coverage and the number of threats neutralized while minimizing the mission completion time and the risk of losing robots during the mission.

This screenshot shows the test map prepared in the Player/Stage environment, where the blue robots represent our team and the red robots represent the objects of interest (OOI). The sensor robots (paths of which are indicated with blue and green) start exploring the partitioned map by visiting the partitions one by one, travelling perpendicular to each other for maximizing the covered area, and the disrupter robot (the path of which is indicated with red) visits and neutralizes all the OOI reported by the sensor robots. If the sensor robots report a closer OOI as the disrupter is on its way for another one, the disrupter plans a new path to the closer OOI and starts heading towards it. This type of re-planning is what makes the mission planner a dynamic one.
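The re-planning rule described above can be illustrated with a minimal sketch. This is not the competition code: the function names are hypothetical, and straight-line distance stands in for the path cost that the real planner would compute on the map.

```python
import math


def nearest_ooi(disrupter_pos, reported_oois):
    """Return the reported OOI closest to the disrupter, or None if none are pending."""
    if not reported_oois:
        return None
    # Assumption: Euclidean distance approximates the true path cost.
    return min(reported_oois, key=lambda ooi: math.dist(disrupter_pos, ooi))


def update_target(disrupter_pos, current_target, reported_oois):
    """Keep the current target unless a strictly closer OOI has been reported."""
    candidate = nearest_ooi(disrupter_pos, reported_oois)
    if candidate is None:
        return current_target
    if current_target is None:
        return candidate
    if math.dist(disrupter_pos, candidate) < math.dist(disrupter_pos, current_target):
        return candidate  # re-plan towards the closer OOI
    return current_target
```

Calling `update_target` every time a sensor robot reports a new OOI gives the dynamic behavior: the disrupter abandons its current goal only when the new report is closer than the one it is heading for.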

Team Cappadocia finished the competition in 4th place among the 6 finalist teams, which had been selected from 23 initial applications.

Cerberus Robot Soccer Team

Cerberus is the RoboCup Standard Platform League team of Boğaziçi University. I joined the team in 2003, when I was a junior undergrad at a different university, and started out by developing some debugging tools. As a part of my senior thesis, I developed an omnidirectional quadruped motion engine for AIBO robots and optimized the gait using a genetic algorithm (GA) for the best possible speed and stability. Although ours was the only team competing with the old ERS-210 models against the more powerful ERS-7 models, we won the Technical Challenges and became the world champion in that category in 2005.

After I came back from UT Austin in 2007, I continued with Cerberus as a member and the captain of the team until 2010.

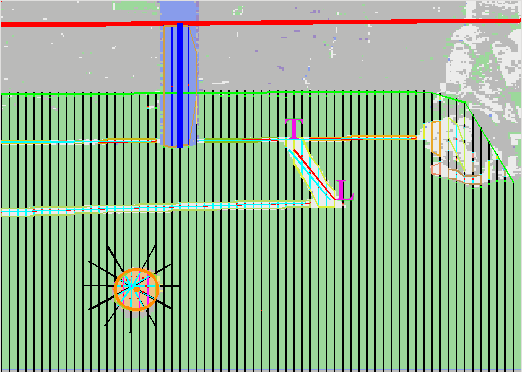

My latest contribution to the team was the development of the entire vision module for the Nao robots from scratch in a couple of weeks.

Careful engineering and design resulted in software that used the robot's 500 MHz Geode processor to efficiently process the images captured by the robot's camera at the full frame rate (30 Hz), spending only 12 milliseconds per frame on average.

The module was able to detect all important objects on the field (and of course not detect anything outside the field), including the field lines and intersections, the ball, the goal bars, and the robots.

Soccer Without Intelligence

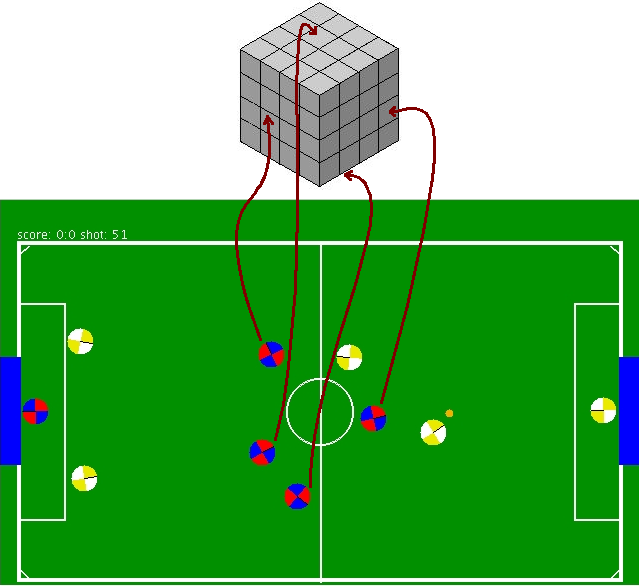

Robot soccer is an excellent testbed to explore innovative ideas and test the algorithms in multi-agent systems (MAS) research. A soccer team should play in an organized manner in order to score more goals than the opponent, which requires well-developed individual and collaborative skills, such as dribbling the ball, positioning, and passing. However, none of these skills needs to be perfect and they do not require highly complicated models to give satisfactory results. In this study, I developed an approach inspired by ants, which are modeled as Braitenberg vehicles for implementing those skills as combinations of very primitive behaviors without using explicit communication and role assignment mechanisms, and applying reinforcement learning to construct the optimal state-action mapping.

Experiments demonstrate that a team of robots can indeed learn to play soccer reasonably well without using complex environment models and state representations. After very short training sessions, the team started scoring more than its opponents that use complex behavior codes, and as a result of having very simple state representation, the team could adapt to the strategies of the opponent teams during the games.
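To give a flavor of how a state-action mapping can be learned over a handful of primitive behaviors, here is a minimal sketch. Tabular Q-learning is used purely as a stand-in, since the description above does not name the specific reinforcement learning algorithm; the class name, the state and action labels, and the parameter values are all hypothetical.

```python
import random
from collections import defaultdict


class QLearner:
    """Tabular Q-learning over a small set of primitive behaviors (illustrative only)."""

    def __init__(self, actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.q = defaultdict(float)  # (state, action) -> estimated value
        self.rng = random.Random(seed)

    def choose(self, state):
        """Epsilon-greedy selection among the primitive behaviors."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        """One Q-learning backup for the observed transition."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])
```

With a very small state space, such as a few coarse sensor readings, the table stays tiny, which is one reason a simple representation can adapt quickly during a game.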

Vulcan Autonomous Wheelchair

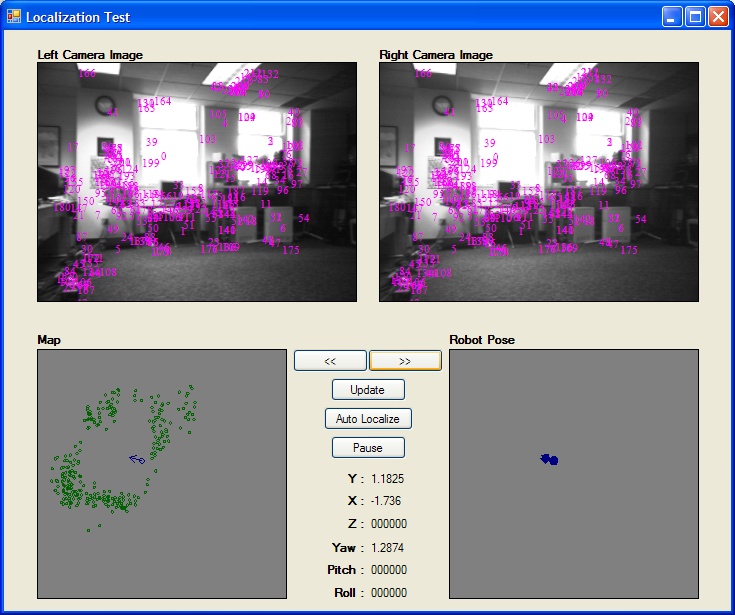

The ability to simultaneously localize a robot and accurately map its surroundings is considered by many to be a key prerequisite of truly autonomous robots. During my MS study at UTCS, as a part of the Intelligent Robotics class taught by Prof. Ben Kuipers, I had the opportunity to implement a 6DoF Visual FastSLAM algorithm for the autonomous wheelchair, Vulcan. I developed a vision-based SLAM method that uses the Scale Invariant Feature Transform (SIFT) features for landmark detection and recognition purposes, and a modified FastSLAM approach for using those landmarks to localize the robot in 3D (i.e. 6-DOF) while creating a map of the environment. The resulting map is essentially a "safety map" that takes into consideration most of the 3D structures in the environment that constitute a potential hazard for the robot, but cannot be detected by planar laser scans, such as overhangs. Also, by getting localized in 3D, the robot is aware of its complete posture, and hence it can plan its future actions within safety limits.

Austin Villa Robot Soccer Team

Austin Villa is the RoboCup Standard Platform League team of The University of Texas at Austin.

During my MS study at UTCS, I joined the team as one of the core members and worked mainly on the development of an omni-directional quadruped motion engine for AIBO robots as well as developing the planner / behavior module. In addition to the team-related work, I also researched ways of applying various machine learning techniques on those robots for the purposes of collision detection and generation of dynamic kicking motions.

UT - Austin Robot Technology DARPA Urban Challenge Team

During my second year at UTCS, I joined the UT - Austin Robot Technology Team, which successfully participated in the DARPA Urban Challenge in 2007. I worked on rectangular obstacle modeling using the laser data, to be particularly used for the detection of other cars in the traffic for intersection management and parking purposes. Additionally, I was involved in the development of the lane detection module as well as modeling the effects of braking on the vehicle's dynamics. I also TA'd the class named "Autonomous Vehicles: Driving in Traffic", taught by Dr. Peter Stone in Spring 2007.

Undergrad projects

ARMy

The system, called ARMy, consists of a robot arm (a 2-link planar manipulator), a camera, a computer, and round, metal pieces to be collected.

The job of the ARMy system is to recognize the red pieces that are randomly scattered over a black surface, locate them, and carry them to a user-defined position using the electromagnet attached to the tip of the arm.
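Moving the tip of a 2-link planar manipulator over a detected piece requires solving the inverse kinematics of the arm. The sketch below shows the standard closed-form solution; it is an illustration rather than the project's code, and the function names, the link lengths `l1` and `l2`, and the `flip_elbow` option are assumptions.

```python
import math


def two_link_ik(x, y, l1, l2, flip_elbow=False):
    """Joint angles (theta1, theta2), in radians, that place the tip at (x, y)."""
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle.
    cos_t2 = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_t2) > 1.0 + 1e-9:
        raise ValueError("target is outside the reachable workspace")
    cos_t2 = max(-1.0, min(1.0, cos_t2))  # guard against rounding error
    theta2 = math.acos(cos_t2)
    if flip_elbow:
        theta2 = -theta2  # the mirrored elbow configuration
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2),
                                           l1 + l2 * math.cos(theta2))
    return theta1, theta2


def two_link_fk(theta1, theta2, l1, l2):
    """Forward kinematics, used here to check the inverse solution."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y
```

Feeding the angles returned by `two_link_ik` into `two_link_fk` should reproduce the requested tip position, which is a convenient sanity check before commanding the arm.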

A detailed report of this project can be downloaded here.

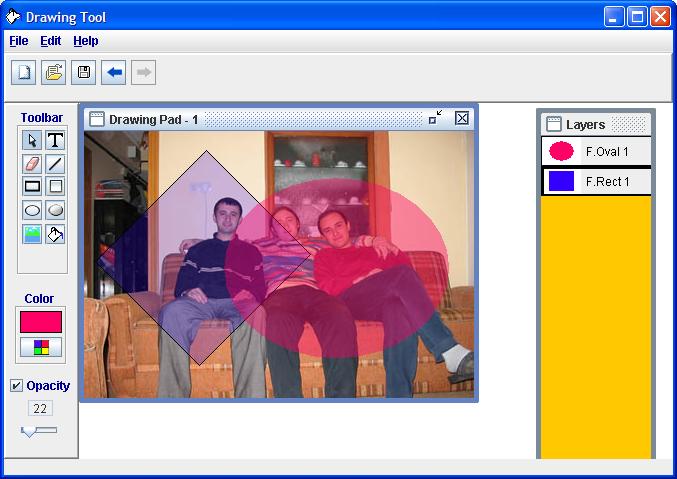

Drawing Tool

Drawing Tool is a software tool that provides some basic drawing functions such as drawing lines, rectangles, ovals, and coloring them. In addition to these functions, it has some advanced functions, such as inserting a picture from a file, rotating and scaling the drawn objects, changing their opacity, and saving them. Since objects are drawn on separate layers, the order of these layers can also be changed by dragging & dropping.

A screenshot from the program is provided below.

The Drawing Tool can be downloaded here.

KickSoffT

KickSoffT is a bird's-eye-view soccer game that is a successor to Dino Dini's Kick Off. My team, GamesBond, extended the open-source project OpenKickOff into KickSoffT. In KickSoffT, the opponent team is an intelligent multi-agent team that uses a market-driven dynamic task allocation algorithm to assign a role to each player, and potential field theory for ball control and attack. I coded all of the game logic (AI) and some of the graphical and audio parts of the game.
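The idea behind market-driven role assignment can be sketched as a simple auction: each player reports a cost for each role, and each role goes to the cheapest player that is still free. This is a simplified illustration, not the game's actual code; the function name, the cost values, and the fixed role priority order are assumptions.

```python
def allocate_roles(costs, role_priority):
    """Assign each role, in priority order, to the free player with the lowest cost.

    costs: {player: {role: cost}}; returns {role: player}.
    """
    assignment = {}
    free = set(costs)
    for role in role_priority:
        if not free:
            break
        # Sorting makes tie-breaking deterministic.
        winner = min(sorted(free), key=lambda p: costs[p][role])
        assignment[role] = winner
        free.remove(winner)
    return assignment


# Hypothetical costs, e.g. derived from each player's distance to the ball or goal.
example_costs = {
    "p1": {"attacker": 2.0, "defender": 5.0},
    "p2": {"attacker": 4.0, "defender": 1.0},
    "p3": {"attacker": 3.0, "defender": 3.0},
}
roles = allocate_roles(example_costs, ["attacker", "defender"])
```

Re-running the auction whenever the costs change, for example as the ball moves, is what makes the allocation dynamic.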

Here is a screenshot from the game.

KickSoffT can be downloaded here.

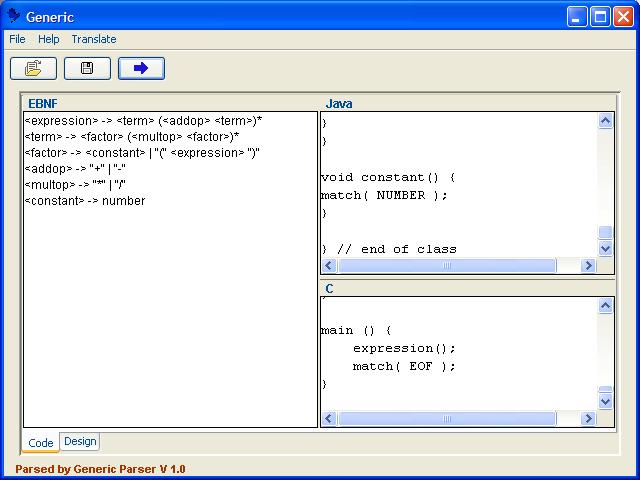

Generic - Parser Generator

Generic is a parser generator that takes a grammar definition in BNF form and generates the corresponding parser code in Java and C.

You can find a sample grammar definition for arithmetic expressions here. Simply click the "Load" button, load this grammar file, and click the "Generate" button to get the parser code.

A screenshot from the program is provided below.

The Generic project can be downloaded here.

Miscellaneous

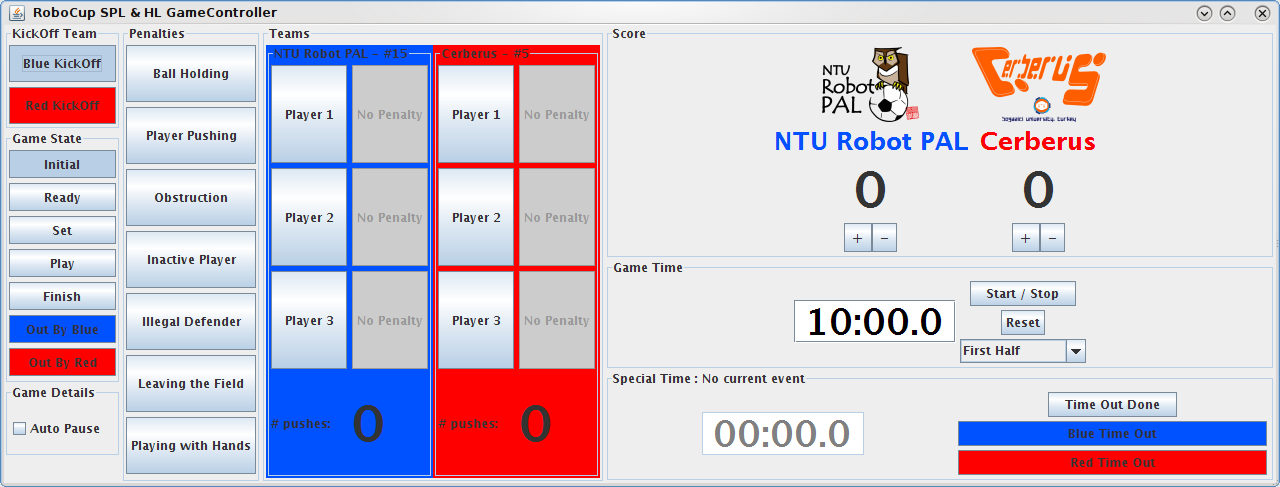

RoboCup GameController

RoboCup GameController is open-source software used in the Standard Platform League and the Humanoid League of RoboCup. Referee commands are sent to the robots on the playing field over a wireless network to provide a higher level of autonomy for the robots.

I was one of the core developers of that software.

The GameController project can be downloaded here (svn is recommended).